3.1.1 Graph Theory

Problems can be represented as state space graphs. Graph Theory can then be used to analyze the structure and complexity of both the problem and the search procedures used to solve it.

This can help us answer questions like:

"Is solution guaranteed to be optimal?"

"What is complexity of search process in terms of time/memory usage?"

Definitions:

- Graph is set of nodes and arcs/links connecting them.

- Nodes are discrete states in process, e.g. configurations of game board or result of logical inference

- Arcs are transitions between states; e.g. logical inferences or legal moves of game

Graph theory is the best tool for reasoning about structure of objects and relations, e.g. Euler and bridges of Konigsberg.

Graph theory and predicate calculus can both represent problems, but graph theory does better job showing structure of the problem. Concept of node degree is readily apparent with graphing, not at all with predicates.

3.1.2 Finite State Machine

FSM is a finite, directed, connected graph, having a set of states, a set of input values, and a state transition function that describes the effect that the elements of the input stream have on the states of the graph.

FSM's are used to recognize components of a formal language.

Not all states are trees - sometimes states can be reached through multiple paths. This should be taken into account when designing search algorithms. One should consider not only the solution path, but the best solution path.

GL provides several examples of state spaces; all are worth study:

GL provides several examples of state spaces; all are worth study:

- Tic-Tac-Toe

- The 8-Puzzle

- The Traveling Salesman

Basic search methods can perform poorly if they are exhaustive; that is, if they lack methods of reducing the size of the search space. Search complexity can be reduced through tools like branch and bound and nearest neighbor. Branch and bound "cuts off" branches when a set bound is surpassed; e.g. when a branch length surpasses that of a solution path already known. Nearest neighbor chooses the next path element based on its given "proximity" to the current state, wherein proximity is an arbitrary measure for the problem domain.

3.2 STRATEGIES FOR

STATE SPACE SEARCH

Data-Driven Search (Forward Chaining) - takes

facts of problem and applies rules or legal moves to produce new facts, leading

to goal.

Suggested if:

- All or most of data is given in problem statement; e.g. geology solver to determine what minerals at given site

- Large number of potential goals but few rules/ways to use given facts. e.g. DENDRAL finding molecular structure based on formulae.

- Difficult to form goal/hypothesis

Goal-Driven Search (Backward Chaining) - uses

information about goal, finds rules required to produce goal and conditions

necessary for these rules. These conditions become new subgoals.

Suggested if:

- Goal/hypothesis is given or easily determined

- There are large number of rules matching facts of system, which would lead to many conclusions. Starting with goal eliminates many wrong branches.

- Problem data are not given and must be determined by solver. e.g. Doctor only orders test he thinks will confirm diagnosis; he doesn't order every test.

Backtrack Algorithm - stores unprocessed states, dead states, and

nodes to keep track of which have been searched, which are bad, and which

haven't been visited. Avoids repetitive looping.

Along with search direction, algorithms need an order in which to examine states. Right now we'll look at depth-first and breadth-first search as examples of ordering.

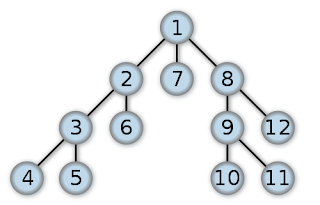

Depth-First Search - analyzes children before siblings. The list of unprocessed nodes is maintained in a LIFO, or stack, fashion whereby newly-discovered nodes (children) are processed first. The above image illustrates the order in which nodes are processed in this stack-based search method:

|

| Tree searched depth-first (source: Wikipedia) |

- Starting at 1, the stack of unprocessed nodes is [2, 7, 8]

- The algorithm goes to node 2

- The children of node 2 are added to the top of the stack: [3,6,7,8]

- 3 is searched next

- The children of 3 are added to stack, which becomes: [4,5,6,7,8]

- And so on...

We see that as more children are discovered, the siblings of previous nodes (7 and 8) are left sitting at the end of the stack.

The space usage of depth-first search is a linear function of the length of the path. So if nodes have an average of B children, the total space usage is B * n to go n levels deep. (p. 106)

Depth-first search may not find the shortest path to a goal state because the children on the path its searching may loop back to a goal on a previous level. This method would have no way of knowing this.

When to use depth-first:

- if solution path is expected to be long. Depth-first search will get into deep space quickly and will not suck up memory by storing siblings.

- When lots of branches exist (for same reason as above).

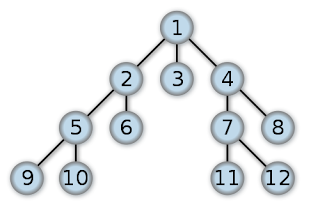

Breadth-First Search - orders states to be processed level by level, so siblings are analyzed before children. This ordering is FIFO and acts as a queue. The following image illustrates this method:

|

| Tree searched breadth-first (source: Wikipedia) |

- The children of state 1 - 2,3,4 - are added to the list of unprocessed nodes: [2,3,4]

- Node 2 is analyzed next.

- It's children - 5 and 6 - are added to the queue: [3,4,5,6]

- Node 3 comes next, and so on...

Breadth-first search will always find the shortest path to a goal, since it goes level by level. This is because the shortest path to a goal is the number of levels between it and the root.

The space usage of breadth-first search is an exponential function of the length of the path, since it adds all nodes on a level to the queue before moving to the next level. If nodes have an average of B children, there will be B^n states on level n.

Breadth-first search is ideal when:

- a simple solution is known to exist

- the branching factor of a graph is low

3.2.4 Depth-First Search with Iterative Deepening

Depth-first iterative deepening combines the small memory usage of depth-first with the thoroughness of breadth-first search. It uses increasing depth-bounds to slowly search the entire space, resulting in it always finding the shortest path to a goal while only using B*n storage space at any level.

All three search strategies discussed here have worst-case exponential time-complexity, which is why heuristics will be used to overcome such uninformed methods.

3.3 Using the State Space to Represent Reasoning with the Propositional and Predicate Calculus

3.3.1 State Space Description of a Logic System

3.3.1 State Space Description of a Logic System

With sound and complete inference rules, graph-based reasoning can be used to find correct conclusions. In short, logic systems can be mapped with graph theory and solved with search methods.

3.3.2 And/Or Graphs

Hypergraphs can be used to accurately depict and/or relations in graph theory. These graphs can have multiple arcs between nodes. For our purposes, and relations will be shown by connecting descendant arcs together with a line.

Note that when solving a node with an and relation, all and descendants must be solved/true.

3.3.3 Further Examples and Applications

Examples considered:

- MACSYMA

- goal-driven and/or search

- Financial Advisor

- English Language Parser and Sentence Generator

Take away:

- Predicate calculus has no global variables - the extent of the meaning of any variable name is contained within the clause in which it appears (i.e. variables are parameters and don't have fixed memory locations)

- Working through examples helps.

(Attribution: these entries are my notes and reflections on George Luger's Artificial Intelligence: Structures and Strategies for Complex Problem Solving. Some of what I write here may be direct from the text. In other words, if something you read strikes you as profound, original, or interesting, I probably didn't write it.)