- inherent ambiguities remove the possibility of an exact solution

- computational costs may be prohibitive.

4.1 Hill-Climbing and Dynamic Programming

4.1.1 Hill-Climbing

Hill-Climbing describes the search strategy of blindly selecting the "best" child of the current node for examination. Because it cannot backtrack, hill-climbing can be easily broken by local maxima or dead ends.

Samuel's 1959 checker's algorithm employed a variation of hill-climbing by attempting to self-improve through modifying its evaluation equation. It attached weighted coefficients to game features (piece advantage, location) and would modify these coefficients on the fly if it began to fare poorly.

4.1.2 Dynamic Programming (DP)

Dynamic Programming utilizes memoization; that is, it tracks solutions to sub-problems for later use in solving the given large problem. This can help boost memory performance by avoiding repetitive calculations. The following two text processing algorithms utilize DP:

1. Optimum global alignment of two strings

For example, the strings BAADDCABDDA and BBADCBA can be aligned as:

BAADDCABDDA

BBA DC B A

To do this we must either shift, insert, or replace characters in order to align the two strings. We can set up the strings in an array and go through each matching point to determine the cost of alignment at that point. Eventually we can move backward through this to find the cheapest alignment.

2. Minimum edit difference

Here we wish to turn one string into another, as cheaply as possible. We set up the two given strings in array fashion as before, but now we calculate the cost at each cell based on if we insert, delete, or replace. Again, we finish by moving through the array along the cheapest path.

For example, to turn intention into execution:

intention

ntention - delete i, cost 1

etention - replace n with e, cost 2

exention - replace t with x, cost 2

exenution - insurt u, cost 1

execution - replace n with c, cost 2

Total cost for this transformation is 1 + 2 + 2 + 2 + 1 + 2 = 8.

So why do Dynamic Programming? Because of its computation cost improvement. Whereas exhaustive search is exponential when comparing two strings (2^n or 3^n), DP costs n^2 or n^3 at worst.

4.2 The Best-First Search Algorithm

4.2.1 Implementing Best-First Search

best-first search utilizes lists to track states: open for states not visited and closed for the previously examined. Unlike depth-first and bread-first search though, best-first also re-orders its open list to keep the most promising states in front. This is termed a priority queue. It also tracks the shortest partial solution path.

In short, best-first search combines the benefits of a shortest-path solution with the flexibility of surviving local maxima and dead ends.

4.2.2 Implementing Heuristic Evaluation Functions

One way to compare similar heuristics is to use an evaluation function, f, such that:

where:

For example, to turn intention into execution:

intention

ntention - delete i, cost 1

etention - replace n with e, cost 2

exention - replace t with x, cost 2

exenution - insurt u, cost 1

execution - replace n with c, cost 2

Total cost for this transformation is 1 + 2 + 2 + 2 + 1 + 2 = 8.

So why do Dynamic Programming? Because of its computation cost improvement. Whereas exhaustive search is exponential when comparing two strings (2^n or 3^n), DP costs n^2 or n^3 at worst.

4.2 The Best-First Search Algorithm

4.2.1 Implementing Best-First Search

best-first search utilizes lists to track states: open for states not visited and closed for the previously examined. Unlike depth-first and bread-first search though, best-first also re-orders its open list to keep the most promising states in front. This is termed a priority queue. It also tracks the shortest partial solution path.

In short, best-first search combines the benefits of a shortest-path solution with the flexibility of surviving local maxima and dead ends.

4.2.2 Implementing Heuristic Evaluation Functions

One way to compare similar heuristics is to use an evaluation function, f, such that:

f(n) = g(n) + h(n)

- g(n) measures the path length from n to the root

- h(n) represents the heuristic estimate of the distance from n to the goal state

With function f we can compare heuristics while taking into account their probability of finding the shortest path. This comes into play if, say, we're comparing two states with identical heuristic evaluations. Whichever of these states is closer to the root will be more likely to lie on the shortest path.

h(n) also provides a breadth-first flavor to an algorithm since it will keep it from being misled too deeply down a search path. As a heuristic travels deeper and deeper into a graph the h(n) term will balloon, causing it to eventually backtrack and pursue a shallower avenue.

4.2.3 Heuristic Search and Expert Systems

Simple games are ideal for learning heuristics because:

4.4.3 The Alpha-Beta Procedure

This method prunes the minimax search space. Two values, alpha and beta, are assigned so that disadvantageous branches are ignored. The alpha value goes with MAX nodes and won't decrease so we can prune MAX grandchildren less than it; the beta goes with MIN nodes and won't increase.

4.5 Complexity Issues

Branching factor - average number of children of any state.

Number of states at depth n is B^n.

(Attribution: these entries are my notes and reflections on George Luger's Artificial Intelligence: Structures and Strategies for Complex Problem Solving. Some of what I write here may be direct from the text. In other words, if something you read strikes you as profound, original, or interesting, I probably didn't write it.)

4.2.3 Heuristic Search and Expert Systems

Simple games are ideal for learning heuristics because:

- They're large enough to benefit from heuristics

- They're complex enough to merit testing by a variety of heuristics so there's a lot we can compare

- They're easy to represent

- States are relatively similar so heuristics can be uniformly applied

Confidence measures (-1 to 1), also known as certainty factors, can be used to quantify how "useful" a conclusion is. For example with the financial adviser system, we may conclude that someone with a lot of savings will want to invest heavily and not save much, but there's always the chance they'll want to save instead. To account for this we can give such a conclusion a certainty factor of 0.9 to show that there's a small chance it's incorrect.

Confidence measures come with their own complexities though; for example, if a conclusion is used as a premise to another expression, how should the confidence measure be factored in?

4.3 Admissibility, Monotonicity, and Informedness

We often wish to evaluate the performance of a heuristic along different metrics.

4.3.1 Admissibility Measures

Admissible heuristics are guaranteed to find the shortest path to a goal state.

A* algorithms utilize best-first-search along with an evaluation function in which h(n) <= minimal path cost from n to goal. For example, breadth-first search is an A* algorithm with h(n) == 0.

I find the notion of "h(n) <= minimal path cost from n to goal" to be confusing. Luger provides an example to help clarify this. He says that with the 8-puzzle, the heuristic of counting the number of tiles not in the goal position is h(n), while the minimal path cost, h*(n), is the number of moves required to move them to their goal position. By these definitions, h(n) < h*(n).

In other words h(n) is simply the output of our heuristic evaluation, while h*(n) is the actual algorithmic cost of reaching the goal state from our present position.

4.3.2 Monotonicity

Monotonicity is basically admissibility applied to all nodes. In other words, the heuristic is everywhere admissible - when a node is visited, it is reached along the shortest path.

4.3.3 Informedness

A heuristic h2(n) is more informed if h2(n) >= h1(n). h2(n) is "closer" to h* and thus is more accurate at searching the given space.

4.4 Using Heuristics in Games

4.4.1 Minimax

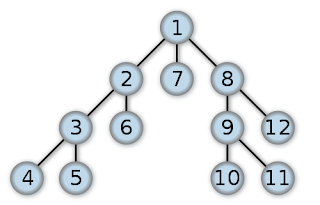

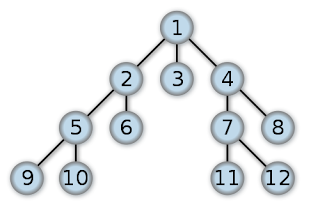

Minimax is used in two-player games. With minimax leaves are scored by heuristic and then selected values are propagated back up the tree. The value of a parent is determined by one of its branches, according to the following rule:

4.4.2 Minimaxing to Fixed Ply Depth

We can use n-ply look-ahead to limit the memory usage of minimax. With this strategy we treat the nodes at level n as the leaves and only propagate n levels.

This method suffers from the horizon effect, wherein we can only look so far ahead and can get trapped by local minima or a clever opponent.

4.3.3 Informedness

A heuristic h2(n) is more informed if h2(n) >= h1(n). h2(n) is "closer" to h* and thus is more accurate at searching the given space.

4.4 Using Heuristics in Games

4.4.1 Minimax

Minimax is used in two-player games. With minimax leaves are scored by heuristic and then selected values are propagated back up the tree. The value of a parent is determined by one of its branches, according to the following rule:

- if the node is a max (i.e. its my turn) then the greatest value is propagated up.

- If the node is a min (i.e. opponent's turn) then the smallest value is propagated up, because this is what the opponent will likely pick.

Note that minimax requires two passes over the search space: one to descend and apply the heuristic, and a second to propagate values back up.

We can use n-ply look-ahead to limit the memory usage of minimax. With this strategy we treat the nodes at level n as the leaves and only propagate n levels.

This method suffers from the horizon effect, wherein we can only look so far ahead and can get trapped by local minima or a clever opponent.

4.4.3 The Alpha-Beta Procedure

This method prunes the minimax search space. Two values, alpha and beta, are assigned so that disadvantageous branches are ignored. The alpha value goes with MAX nodes and won't decrease so we can prune MAX grandchildren less than it; the beta goes with MIN nodes and won't increase.

4.5 Complexity Issues

Branching factor - average number of children of any state.

Number of states at depth n is B^n.

(Attribution: these entries are my notes and reflections on George Luger's Artificial Intelligence: Structures and Strategies for Complex Problem Solving. Some of what I write here may be direct from the text. In other words, if something you read strikes you as profound, original, or interesting, I probably didn't write it.)